Transhumanism

Wednesday, October 10, 2007

Friday, October 27, 2006

Cryonics

Cryonics is a medical procedure aimed at preserving the bodies of terminal patients until such time as advanced medical procedures can provide for their resuscitation. To revive a patient in a state of cryopreservation would require not only curing the cause of death, which would be the easy part, but also reversing the damage caused by storing the body in liquid nitrogen at about -196 °C.

A process resembling cryonics was envisioned by Benjamin Franklin as early as 1772. Franklin wrote to a friend:

“I wish it were possible… to invent a method of embalming drowned persons, in such a manner that they might be recalled to life at any period, however distant; for having a very ardent desire to see and observe the state of America a hundred years hence, I should prefer to an ordinary death, being immersed with a few friends in a cask of Madeira, until that time, then to be recalled to life by the solar warmth of my dear country! But… in all probability, we live in a century too little advanced, and too near the infancy of science, to see such an art brought in our time to its perfection.”

It is surprising to reflect that a beta version of Franklin’s desired cask may exist today in the form of Bigfoot Dewars, the thermos-like vacuum flasks in which cryonics patients are stored.

Bigfoot Dewar

There is no shortage of critics of cryonics who maintain that it would be impossible to revive someone placed in suspension, regardless of any future technological developments. Dr. Arthur Rowe, a cryobiologist often quoted by opponents of cryonics, claimed that believing cryonics could reanimate someone who had been frozen is like “believing you can turn hamburger back into a cow.” On a similar note, my high school physics teacher taught me that cryonics could never work because when you freeze human tissue, ice crystals form and cause irreparable damage to the cells. As it turns out, ice formation may have been a pertinent issue in early, failed experiments in cryonics, but it does not concern the methods now used at Alcor, which was established in 1972. A tissue-preservation process currently in use, called vitrification, preserves cells in a glassy solid state without the formation of ice crystals.

Some of the evidence supporting the feasibility of cryonics came as early as 1984, when Jerry Leaf, a cardiothoracic surgery researcher at the UCLA School of Medicine, helped develop a cryopreservant blood substitute shown to be capable of sustaining life in dogs for up to four hours. Leaf, who took part in special operations during the Vietnam War that had a mortality rate of over 50%, stated in an interview in 1986:

“I left my fear of death somewhere in the jungles of Vietnam. To this day, I have absolutely no fear of death, only the fear of not being able to save someone else that I care about. It’s not that I don’t want life for myself, because I do very much. I only have positive feelings towards life. I want more of it.”

Leaf was himself cryopreserved at Alcor in 1991 following a fatal heart attack.

Jerry Leaf with a survivor of reanimation

More recently, a 2006 article in Wired reported that Hasan Alam, a trauma surgeon at Massachusetts General Hospital, had successfully suspended and revived pigs, over two hundred of them, for an hour each. Taken together, this evidence shows that the cessation of the heartbeat does not necessarily mark the irreversible end of life, as if present-day defibrillators and heart transplant surgery were not already sufficient proof of that fact.

Cryopreservation at Alcor can be funded through a life insurance policy, meaning that it can be paid for over the course of 25 years. If one is younger than 60, chances are that by starting monthly payments now, for about the cost of a cable television bill, one could pay off a cryonics policy well before the actual medical intervention is needed. Some might contend that such a considerable sum of money would be better spent on those in dire poverty than invested in a method of life extension that has never been demonstrated to work. However, those same voices of dissent will routinely spend the same amount, if not greater sums, on medical bills to extend the length of morbidity: that is, adding extra months and years to the time of greatest physical pain and mental deterioration.

To say that human civilization will never devise a means to revive patients from cryopreservation is an extreme statement, considering that before the 20th century heavier-than-air powered flight was generally considered scientifically impossible. No machine had achieved it before the Wright brothers engineered its invention. For a terminal patient to wait in cryonic suspension for the entirety of the 21st century without hope of revival would require the extinction of our culture’s concern for scientific inquiry. All the available evidence points to revival one day being attainable. Given the freedom to choose between suspension and burial, I would argue that there is an obvious rational basis for opting for cryonics.

Links to further material:

Alcor's website

The Molecular Repair of the Brain by Ralph Merkle

Death in the Deep Freeze television documentary

Suspended Animation by Vitrification video presentation by Brian Wowk at the Immortality Institute conference

Wednesday, October 25, 2006

Evolutionary Development

Unlike the meaningful designs lying behind human creativity, no intelligent decision-making underlies the process of evolution. Species evolve as the result of random mutations. Those mutations that enhance fitness are passed on, while those deleterious to survival prove short-lived. Here a two-sided process is at work: random experimentation drives evolutionary change, while development prunes variety down to the optimum. These two factors work together to produce the phenomenon of evolutionary development.
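The two-sided process described above, random variation followed by selective pruning, can be illustrated with a toy mutation-and-selection loop. This is a minimal sketch under stated assumptions, not a model of biological evolution: each "genome" is a single number, fitness is simply closeness to a hypothetical optimum (`target`), and the population size, mutation scale, and selection rule (keep the fittest half of parents plus offspring) are arbitrary choices made for the example.

```python
import random


def evolve(target: float, pop_size: int = 50, generations: int = 100,
           mutation_sd: float = 0.5, seed: int = 0) -> tuple[list[float], list[float]]:
    """Toy variation-plus-selection loop over real-valued 'genomes'.

    Returns (initial_population, final_population). The target and all
    parameters are illustrative assumptions, not biological data.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible

    def fitness(x: float) -> float:
        # Higher is better: closeness to a hypothetical optimum.
        return -abs(x - target)

    # Experimentation: start from a widely scattered population.
    initial = [rng.uniform(-100.0, 100.0) for _ in range(pop_size)]
    population = list(initial)

    for _ in range(generations):
        # Variation: every individual produces one randomly mutated offspring.
        offspring = [x + rng.gauss(0.0, mutation_sd) for x in population]
        # Selection: only the fittest pop_size of parents + offspring survive.
        pool = sorted(population + offspring, key=fitness, reverse=True)
        population = pool[:pop_size]

    return initial, population


def spread(values: list[float]) -> float:
    """Range of a population: a crude measure of remaining variety."""
    return max(values) - min(values)


if __name__ == "__main__":
    start, end = evolve(target=42.0)
    print(f"variety before: {spread(start):.1f}, after: {spread(end):.3f}")
    print(f"closest individual: {min(end, key=lambda x: abs(x - 42.0)):.3f}")
```

Running it shows the population's range shrinking sharply as the far-off variants are discarded and the survivors cluster near the target, which is the sense in which selection "prunes variety down to the optimum" in this toy setting.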

In the early stages of an evolutionary paradigm, one finds a larger degree of variation before development pares design down to optimum efficiency. For instance, some lizards have a parietal eye, a “third eye” on top of the head. This photosensory organ lends evidence to the supposition that nature throws out all sorts of variations upon a common theme before a dominant paradigm develops, like that of dual forward-facing eyes.

Certain factors in the evolutionary development of dinosaurs suggest those animals were converging upon evolutionary paradigms more closely resembling our own. The body plan of Troodon, a relatively small pack hunter of the Late Cretaceous period, is conspicuously anthropomorphic. These creatures had the largest brains relative to body size of any known dinosaur and sported forward-facing eyes. Paleontologist Dale Russell assembled a nearly complete Troodon skeleton in 1969. He speculated that had the species not been killed off by a meteorite impact extinction event, the evolutionary result would have been an intelligent “Dinosauroid.”

The dinosauroid hypothesis

If Russell’s intuitions are accurate, the Dinosauroid hypothesis has profound implications for the future trajectory of the human species. If the vagaries of blind evolutionary selection predictably settle upon dominant archetypes, such as those found in large-brained mammals, there may well be a general path that intelligent species are destined to follow. The best way to verify the hypothesis would be to examine examples of extraterrestrial intelligent life. But first we might ask just how likely we are to find extraterrestrial intelligence (ETI) in the first place.

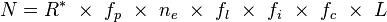

In 1961, astronomer Frank Drake devised an equation for estimating the number of communicating civilizations in our galaxy. It multiplies the rate of star formation in the Milky Way by the fraction of stars that have orbiting planets, the average number of planets per such star capable of sustaining life, the fractions of those planets on which life and then intelligence arise, the fraction of intelligent civilizations that communicate via electromagnetic radiation, and the length of time such civilizations keep communicating. The resulting Drake equation includes many uncertain variables, for there is no way of knowing how likely life is to evolve on a planet like the earth, or whether radio transmission is an inevitable convergent phenomenon arising from intelligence. Drake estimated there should be around ten thousand radio-capable civilizations in the galaxy. Carl Sagan guessed one million.

The Drake equation states that:

$$N = R_{*} \cdot f_p \cdot n_e \cdot f_l \cdot f_i \cdot f_c \cdot L$$

where:

N is the number of civilizations in our galaxy with which we might expect to be able to communicate at any given time

R* is the rate of star formation in our galaxy (new stars per year)

fp is the fraction of those stars that have planets

ne is the average number of planets that can potentially support life per star that has planets

fl is the fraction of the above that actually go on to develop life

fi is the fraction of the above that actually go on to develop intelligent life

fc is the fraction of the above that are willing and able to communicate

L is the expected lifetime of such a civilization, that is, the length of time (in years) over which it releases detectable signals
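Because the Drake equation is a plain product, it is easy to evaluate for any set of guesses. The Python sketch below is a minimal illustration; the input values are hypothetical, chosen only so the arithmetic is easy to follow, and are not estimates made by Drake, Sagan, or anyone else. Since N is directly proportional to every factor, a hundredfold gap like the one between Drake's and Sagan's figures could arise from a disagreement about L alone.

```python
def drake(r_star: float, f_p: float, n_e: float, f_l: float,
          f_i: float, f_c: float, lifetime_years: float) -> float:
    """Drake equation: N = R* * fp * ne * fl * fi * fc * L.

    r_star is in new stars per year and lifetime_years in years, so N is
    a dimensionless count of communicating civilizations.
    """
    return r_star * f_p * n_e * f_l * f_i * f_c * lifetime_years


if __name__ == "__main__":
    # Hypothetical inputs for illustration only, not measured values.
    baseline = dict(r_star=1.0, f_p=0.5, n_e=2.0, f_l=1.0,
                    f_i=0.01, f_c=0.01)
    for lifetime in (1e4, 1e6, 1e8):
        n = drake(**baseline, lifetime_years=lifetime)
        print(f"L = {lifetime:.0e} years -> N = {n:.3g}")
```

With these made-up inputs, lifetimes of 10^4, 10^6 and 10^8 years give N of roughly 1, 100 and 10,000 respectively, and setting any single factor to zero makes N zero.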

SETI observations have been conducted at the 305 m radio telescope at the Arecibo Observatory in Puerto Rico.

The implications of the Drake equation were a motivating factor behind the SETI Institute’s search for extraterrestrial intelligence. SETI’s senior astronomer, Seth Shostak, in a New Scientist article entitled “ET First Contact Within 20 Years,” cited the Drake and Sagan estimates as likely bounds within which one could expect to find the actual number of humanlike alien civilizations. Ray Kurzweil notes in The Singularity is Near, “The assumption behind SETI is that life—and intelligent life—is so prevalent that there must be millions if not billions of radio-capable civilizations in the universe… Not a single one of them, however, has made itself noticeable to our SETI efforts thus far” (347). In 1950, Enrico Fermi famously asked, “Where is everybody?” The question proves even more puzzling today, now that decades of SETI searches have turned up no sign of anyone else.

The Acceleration Watch webpage

One common response to the Fermi paradox is that earth may well be the first planet in the universe to carry intelligent life. For if an intelligent species within this galaxy had developed advanced technologies thousands of years before humans, let alone millions, we should have heard from them by now. Futurist John Smart, president of the Acceleration Studies Foundation, has proposed an alternate solution to the Fermi paradox, one that does not require humankind to have preternaturally “lucked out.” Smart’s universal transcension scenario hypothesizes that as intelligent civilizations develop advanced technologies, they choose not to spread throughout the universe. Rather, they delve into inner and virtual space, essentially escaping this universe.

Futurist John Smart, President of the Acceleration Studies Foundation

Smart describes universal transcension as an inevitable result of convergent evolution. As life evolves, it undergoes what Smart has termed MEST compression, yielding greater efficiencies of matter, energy, space, and time. Seen this way, both the evolutionary development of living species and the accelerating progress of information technologies follow the same paradigm of increasingly efficient design. Homo sapiens dominates the planet because of its MEST-efficient phenotype. Compared with their extant earthbound alternatives, human body plans are ideal for developing intelligent machines. As a natural result, the next step in life on earth must necessarily be human-equivalent AI. We should expect self-modifying artificial intelligence to be more interested in improving its MEST efficiency by iteratively redesigning its hardware than in expanding outward. This course will take intelligent life deeper and deeper into inner space.

Audio and PowerPoint slides of John Smart's presentation on MEST compression at the Singularity Summit at Stanford

Expanding through space would not only be a waste of time and energy for our superintelligent progeny; the trip would also prove dangerous and unenlightening. Forget trashing the universe with our genes, memes, and other viral junk. Had another civilization been so messy, ours would never have seen the light of day. In thinking about the future, we ought to consider nanometers, nanoseconds, and the infinite expanses of virtual reality. If convergent evolution demonstrates anything, it is that the vision of future intelligences will reveal vast and complex vistas in the hospitable domains of the tiny and invisible.

Sunday, September 24, 2006

Nanotechnology

When it has been fully developed, molecular nanotechnology will be able to create almost any structure consistent with the laws of physics and chemistry. Nanotech assemblers will do so by manipulating individual atoms, building physical materials from the bottom up through the precise placement of particular molecules. In terms of the quality of products such molecular manufacturing will be able to generate and the efficiency with which assemblers will convert cheap feedstock into valuable materials, molecular nanotechnology will offer unprecedented economic benefits. The ability to rapidly produce and disseminate digitized information will have migrated into the realm of physical objects. Understandably, the results of such a phenomenon are both encouraging and worrisome.

Richard Feynman, on the bongos

Delivered in 1959, Richard Feynman’s informal talk “There’s Plenty of Room at the Bottom” was one early proposal for storing information by means of precise atomic manipulation. There, the influential physicist remarked, “Put the atoms down where the chemist says, and so you make the substance. The problems of chemistry and biology can be greatly helped if the ability to see what we are doing, and to do things on an atomic level, is ultimately developed—a development which I think cannot be avoided.” Feynman offered a thousand-dollar prize to anyone who could take a page of text and reduce its linear dimensions by a factor of 25,000. That level of miniaturization would allow the entire Encyclopedia Britannica, all twenty-four volumes’ worth, to be written on the head of a pin. The prize was claimed by a Stanford graduate student named Tom Newman, who in 1985 wrote out the first page of A Tale of Two Cities on a square 1/160 mm on a side. He used electron beam lithography equipment intended for making integrated circuits.
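The scale arithmetic behind Feynman's challenge is easy to check. A reduction of 25,000 in linear scale shrinks areas by 25,000² = 625 million. The sketch below scales Newman's 1/160 mm page back up to full size, along with a pin head 1/16 inch across, the figure Feynman used in his talk; the helper names `full_size` and `area_factor` are made up for this example.

```python
SCALE = 25_000  # Feynman's linear reduction factor


def full_size(reduced: float, scale: int = SCALE) -> float:
    """Length an object had before being shrunk by `scale` along each side."""
    return reduced * scale


def area_factor(scale: int = SCALE) -> int:
    """Shrinking every length by `scale` shrinks every area by scale**2."""
    return scale ** 2


if __name__ == "__main__":
    # Newman's page: a square 1/160 mm on a side (figure from the text above).
    page_side_mm = full_size(1 / 160)
    # Pin head: 1/16 inch across, the figure Feynman used in his talk.
    pin_head_in = full_size(1 / 16)
    print(f"Newman's page at full size: about {page_side_mm:.2f} mm on a side")
    print(f"Pin head at full size: about {pin_head_in:.1f} inches across")
    print(f"Area reduction: {area_factor():,} to 1")
```

Newman's tiny page comes out at about 156 mm on a side, roughly the width of a printed book page, and the pin head at about 1,560 inches (some 130 feet) across; Feynman's claim was that this enlarged area roughly equals that of all the pages of the encyclopedia.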

"It was the best of times, it was the worst of times," written by Charles Dickens with the help of Tom Newman using an electron beam lithography machine with a reduction size of 25,000 to 1

The term nanotechnology, in the sense of building working machines on the nanometer scale, was popularized by MIT engineer Eric Drexler. For years Drexler fought the prevailing wisdom that atoms could not be manipulated to make complex machines because of the interfering effects of quantum-mechanical uncertainty. On that view, atoms behaved more like erratic gnats than billiard balls, so one could not construct reliable devices out of them. Drexler countered that such devices already existed in nature. Consider, for instance, the ribosome, a molecular machine only about 20 to 30 nanometers across.

In his book Engines of Creation: The Coming Era of Nanotechnology (freely available online), Drexler described the applications of molecular nanotechnology (MNT). The chapter entitled “Engines of Healing” details the potential for MNT to provide radical life extension and the revival of cryonics patients. The book also describes the possibility that self-replicating nanodevices could cause massive terrestrial destruction. With that in mind, taking the precautionary measures necessary for mature nanotechnology to be deployed safely became a central concern of Drexler’s efforts and led him to create the Foresight Institute in 1986, headquartered in Palo Alto. Taking all factors into consideration, one cannot overstate the thoroughness of Eric Drexler’s work in the foundational research, theory, and deployment of safety measures surrounding nanotechnology.

K. Eric Drexler

Listen to his recent presentation at the Singularity Summit here.

Unfortunately, the moniker “nanotechnology” became a buzzword in the years after Engines of Creation and was co-opted by marketers hoping to glamorize almost any product involving nanoscale blobs. The so-called iPod nano, for one, is many orders of magnitude larger than a nanometer. By the common criteria, Drexler remarked, producing cigarette smoke would be a form of nanotechnology. The phrase “molecular nanotechnology” therefore specifies the more complex designs detailed in Drexler’s engineering text Nanosystems: Molecular Machinery, Manufacturing, and Computation. These devices, when they are finally implemented, will be the smallest, fastest, most precise mechanisms allowable by the laws of physics.

Those who first heard about nanotechnology in 1986, with the publication of Engines of Creation, might hastily dismiss the importance of this developing area of scientific inquiry. Like strong artificial intelligence, nanotechnology has developed a reputation as science fiction: it “is ten years away, and it always will be.” A number of developments at work today speak to the contrary. Nanotechnology will not always be ten years away. As Ed Regis notes in his engaging nanotechnology primer Nano, “Just try to stop it.”

In 2002, the Center for Responsible Nanotechnology was founded as a non-profit organization devoted to considering the ethical concerns surrounding the impending development of molecular nanotechnology. The company Nanorex is designing computer simulations of nanoscale devices so that when the physical technology is developed, engineers will have the tools needed to hit the ground running. And Zyvex, based in Richardson, Texas, the first private company focused on nanotechnology to earn a profit, includes in its mission statement the goal of producing the world’s first assembler. It counts among its research scientists influential thinkers such as Ralph Merkle, Rob Freitas, and Eric Drexler. Considering these facts, it should be clear that nanotechnology will change the face of the earth within our time. It will change everything from the bottom up, and will test the sustainability of human civilization like never before.

Productive Nanosystems: From Molecules to Superproducts. The film, sponsored by Nanorex and the Foresight Institute, depicts an animated view of a nanofactory and demonstrates key steps in a process that converts simple molecules into a billion-CPU laptop computer.

Saturday, September 23, 2006

The Singularity

When intelligence on earth has hit a wall in computational capacity enforced by the laws of physics, the Singularity will have happened. Can we speculate about what kinds of events will lead up to the Singularity? Several significant intellectuals already have.

In a nutshell

Vernor Vinge coined the term "technological singularity" to refer to the metaphorical event horizon of technological progress beyond which we cannot see. Vinge chose the word “singularity” purely as a rhetorical flourish to connote the inescapable gravitational singularity of a black hole. In his 1993 essay “The Coming Technological Singularity,” Vinge posited a simple logical argument so compelling that it considerably altered the trajectories of science fiction and speculative futurism. If human beings make a machine smarter than us, what is that machine likely to do with its intelligence? The answer, of course, is to build more intelligent machines. The outcome is a feedback loop that could have global intelligence evolving at the rates we currently see in computational complexity. The essay begins, “Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.”

In 1965, British statistician I.J. Good, known for his work in Bayesian statistics, envisioned a situation similar in scope to Vinge’s technological singularity, which he called an intelligence explosion. "Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever,” Good wrote. “Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an 'intelligence explosion,' and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make."

Even earlier, in writings dating back to the 1920s, a French Jesuit priest named Pierre Teilhard de Chardin saw the earth evolving into what he termed a noosphere. The earth was becoming “cephalized” in De Chardin’s view, meaning it was growing a head. Due to the increased communication of disparate groups of people by means of ever-evolving information technologies, the global human population would become increasingly “lovingly interdependent.” Outlined in The Phenomenon of Man, his vision of the future thematically prefigures Vinge’s and Good’s in its focus on information technologies and a global phase transition. An excellent extrapolation of De Chardin’s thesis is available at Acceleration Watch. For those interested in investigating a sane confluence of technology and theology, De Chardin is a good place to start.

Perhaps the single most lucid essay on the subject was written by Eliezer Yudkowsky in 1996 and is titled Staring Into the Singularity. It begins:

If computing speeds double every two years,

what happens when computer-based AIs are doing the research?

Computing speed doubles every two years.

Computing speed doubles every two years of work.

Computing speed doubles every two subjective years of work.

Two years after Artificial Intelligences reach human equivalence, their speed doubles. One year later, their speed doubles again.

Six months - three months - 1.5 months ... Singularity.

Plug in the numbers for current computing speeds, the current doubling time, and an estimate for the raw processing power of the human brain, and the numbers match in: 2021.

But personally, I'd like to do it sooner.
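The arithmetic in the passage above is a geometric series. If each doubling takes a fixed two years of subjective research time, and the AIs' subjective time runs faster in proportion to their speed, then the real-time intervals are 2, 1, 1/2, 1/4, ... years, which sum to 2 / (1 - 1/2) = 4 years. The short Python sketch below tabulates that schedule; the function name and the choice of ten doublings are illustrative assumptions, and the code models only the idealized argument, not any real forecast.

```python
def doubling_schedule(subjective_years_per_doubling: float = 2.0,
                      doublings: int = 10) -> list[tuple[float, float]]:
    """Real years elapsed and relative speed after each speed doubling.

    Assumes, as in the quoted argument, that each doubling requires a fixed
    amount of *subjective* research time and that subjective time runs
    faster in proportion to speed (speed 1.0 = human equivalence).
    """
    elapsed_years = 0.0
    speed = 1.0
    schedule = []
    for _ in range(doublings):
        # Fixed subjective work, performed `speed` times faster than real time.
        elapsed_years += subjective_years_per_doubling / speed
        speed *= 2.0
        schedule.append((elapsed_years, speed))
    return schedule


if __name__ == "__main__":
    for elapsed, speed in doubling_schedule():
        print(f"after {elapsed:.4f} years: {speed:g}x human speed")
```

Every entry stays below four years: under these idealized assumptions, the speedup grows without bound within four real years of human equivalence, which is the point the quotation counts down to.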

Yudkowsky has made it his life’s purpose to ensure a safe transition to a technological singularity, helping to found the non-profit Singularity Institute for Artificial Intelligence and developing what he has termed Friendly AI. His emphasis is not on rushing headlong into a singularity, but on painstakingly ensuring that the transition reflects, to the best of our ability, the most fundamental ethical standards of human civilization, as determined by research into cognitive science, evolutionary psychology, and a host of other complex scientific disciplines. His non-profit is perhaps the most serious overt effort to protect humanity against a failed singularity, a relatively long-term but nevertheless pressing existential risk. It is also an effort toward protecting against the many other existential risks staring us in the face. The Singularity Institute provides an online reading list that is both comprehensive and easy to navigate.

Technology innovator and proactive humanitarian Ray Kurzweil’s most recent book, The Singularity is Near: When Humans Transcend Biology, lays out an ideal game plan to get humankind to a positive singularity around 2045. Kurzweil predicts that the singularity will follow from a series of steps, each contingent upon the last, progressing from biotechnology to nanotechnology to artificial intelligence and the singularity. For someone who would like to gain a comprehensive grasp of the technological innovations standing between us today and a future singularity, while suffering no unneeded future shock, Kurzweil’s book is an eloquent, life-affirming, and accessible explication of the singularity.

The accelerating rate of technological progress charted by Ray Kurzweil and leading to a technological singularity

Some researchers in artificial intelligence, like Novamente's Ben Goertzel for instance, see the possibility for a “hard take-off” if AI research is accelerated. In a hard take-off scenario, an infant-level artificial intelligence would progress to superintelligence in a very short span of time, accomplishing all the necessary technological achievements that would get human civilization to a singularity. Such a superintelligence basically would compress Kurzweil’s timescale down to a few years, or perhaps even months. The best possible outcome we could hope for is a hard take-off positive technological singularity happening sometime this evening. The further off the singularity is postponed, the greater the likelihood of an intervening existential threat ending the human experiment.

In short, if you have ever wondered what it’s all about, why even bother getting up in the morning, or whether life has any meaning, one rather obvious area to investigate is the coming technological singularity.

Now here is Ben Goertzel to explain to you how to get to the singularity.

Artificial General Intelligence and its Role in the Singularity

Ten Years to the Singularity (If We Really Try)

Thursday, September 21, 2006

Cultured Meat

Alternet in July of 2006 posted a decidedly paranoid response to the development of "in vitro meat," meat cultured in laboratories free of animal suffering. The article demonstrates that progressive news media may still latch onto bioconservative political stances. Cultured meat would provide a dietary source of protein free of growth hormones, pathogens, and animal cruelty. Yet the Alternet article couldn’t get past the concept that labs are “yucky.”

Scientists growing meat in petri dishes say it's safer, healthier, more humane, and less polluting. But can we get past the 'yuck' factor?

"The concept is as simple as it is horrifying," reported Traci Hukill for Alternet. "It seems like something out of a chilling sci-fi future, the very epitome of bloodless Matrix-style barbarism."

The Hukill article reveals the ease with which cultured meat can become popularly associated with toxic chemicals or bacteria because these are things you typically find in laboratories. Proponents of alternative protein sources await a PR campaign to remind people that there are no more sanitary conditions than those found in lab settings. Typically the term “barbarism” isn’t associated with scientific experimentation, though it is an apt description of the present-day conditions that cultured meat is attempting to supplant.

The Alternet article’s reasoning falls apart the moment you compare the minor "yuck-factor" associated with vat-grown animal protein to what goes on daily in the meat industry. Since competition to reduce the price of meat, eggs, and dairy products has driven the worldwide trend to replace small family farms with large warehouses, animals are typically confined in crowded cages or pens or in restrictive stalls. Nine billion farm animals are killed each year in the United States to produce meat. Under a new system implemented in 1998, the US Department of Agriculture no longer tracks the number of humane-slaughter violations its inspectors find each year.

"Privatization of meat inspection has meant a quiet death to the already meager enforcement of the Humane Slaughter Act," writes Gail Eisnitz of the Humane Farming Association, a group that advocates better treatment of farm animals. "The USDA isn't simply relinquishing its humane-slaughter oversight to the meat industry, but is -- without the knowledge and consent of Congress -- abandoning this function altogether."

New Harvest is a non-profit organization working on cultured meat.

Handling animals humanely in a factory-farm setting is proving increasingly untenable, making cultured meat a reasonable solution to the problem of the ethical treatment of animals. In vitro meat would allow better screening against salmonella, mad cow disease (BSE), campylobacter, E. coli, avian influenza, and any number of other harmful foodborne pathogens showing up in grocers’ freezers and fast-food restaurants. According to New Harvest, contaminated meats contribute to 76 million episodes of illness, 325,000 hospitalizations, and 5,000 deaths each year. Together with animal feed production, meat production is responsible for emissions of nitrogen and phosphorus, pesticide contamination of water, heavy metal contamination of soil, and acid rain from ammonia emissions. Because laboratory meat is grown under controlled conditions impossible to maintain on traditional animal farms, it is potentially safer, more nutritious, and more environmentally responsible than conventional meat.

It should be taken into account that in biomedical research, growing mammalian cells has required a nutrient medium that includes fetal bovine serum: serum taken from fetuses discovered inside cows after they have been butchered for meat at a slaughterhouse. Dutch researchers, mindful of the animal-friendly image cultured meat must maintain, have perfected a completely vegetarian growth medium consisting of modified fungi, water, and glucose, making stem cells the only source of animal tissue in the process. Three Dutch universities are contributing research toward developing meat from the stem cells of pigs. The universities of Eindhoven, Utrecht, and Amsterdam have transformed the cells into myoblasts. "Six years from now we might already have a product," says Dr. Henk Haagsman, Professor of Meat Sciences at the University of Utrecht. "No loin, yet, but indeed a kind of minced meat the catering industry can use in pizzas or sauces."

As stem cell research in the nascent field of regenerative medicine develops, it may become feasible to genetically reprogram adult cells, making them indistinguishable from embryonic stem cells. Research to this end is already underway at the Howard Hughes Medical Institute. Reprogramming somatic cells would allow any tissue sample to be cloned, allowing you to grow your own tissue for consumption. In need of protein, hypothetically you could eat yourself.

New Harvest and the scientists at Dutch universities are showing that producing meat without animal suffering is a near-term objective. Here is a simple solution to the problem of animal suffering that demands our consideration. If you would think twice about having your cat or dog for lunch, the prospect of cultured meat clearly represents a logical ethical alternative to killing animals altogether.

Wednesday, September 06, 2006

Memes

The "meme" is a term coined by Oxford zoologist Richard Dawkins in his book The Selfish Gene, published in 1976. Dawkins observed that ideas are subject to the same manner of evolutionary patterns as genes, undergoing diffusion, mutation, and extinction based upon their fitness in the surrounding environment. Ideas replicate when shared between people and undergo alterations through both accidental and deliberate misreadings. Memes include any meaningful pattern of information, from ideologies, to clothing styles, to one-liners. A meme, then, can be considered a unit of cultural inheritance communicated from person to person like a virus. When you find yourself humming the tune of a popular new song, you are engaging in the transmission of a meme.
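One way to make the analogy with genes concrete is the discrete replicator equation from evolutionary game theory. Dawkins does not use it here; it is brought in only as an illustration. Each meme's share of hosts is multiplied by its fitness and renormalized, so memes that spread better than average grow while the rest dwindle. The meme names and fitness values below are hypothetical, and the sketch models only differential spread, not mutation.

```python
def replicator_step(shares: list[float], fitness: list[float]) -> list[float]:
    """One generation of discrete replicator dynamics.

    Each meme's share grows in proportion to its fitness relative to the
    population-average fitness; shares are renormalized to sum to 1.
    """
    weighted = [s * f for s, f in zip(shares, fitness)]
    total = sum(weighted)
    return [w / total for w in weighted]


def simulate(shares: list[float], fitness: list[float], generations: int) -> list[float]:
    """Apply replicator_step repeatedly and return the final shares."""
    for _ in range(generations):
        shares = replicator_step(shares, fitness)
    return shares


if __name__ == "__main__":
    # Hypothetical memes and fitness values, chosen only for illustration.
    names = ["slogan", "jingle", "catchy tune"]
    fitness = [1.0, 1.1, 1.5]
    start = [1 / 3, 1 / 3, 1 / 3]  # every meme starts with an equal share
    for gen in (0, 5, 20, 50):
        result = simulate(start, fitness, gen)
        print(gen, ", ".join(f"{n}: {s:.3f}" for n, s in zip(names, result)))
```

After 50 generations the fittest meme holds more than 99% of hosts, while the others shrink toward zero without ever quite disappearing; true extinction would require modeling a finite population of hosts.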

Merely by observing nature, Dawkins notes in The Blind Watchmaker, one can see that genes are information carriers. Watching a willow tree rain down seeds upon the earth is enough to recognize that it is spreading instructions. "It's raining tree-growing, fluff-spreading algorithms. That's not a metaphor, it's the plain truth. It couldn't be any plainer if it were raining floppy discs." Memes operate in a similar fashion and are propagated continuously through our information technologies. Our radios, telephones, TVs, and personal computers are wired to shower their users with memes.

In Great Mambo Chicken and the Transhuman Condition by Ed Regis, Dawkins' colleague N.K. Humphrey notes that memes are truly alive in that they are the expression of human thought. "When you plant a fertile idea in my mind you literally parasitize my brain, turning it into a vehicle for the meme's propagation in just the way that a virus may parasitize the genetic mechanism of a host cell. And that isn't just a way of talking--the meme for, say, 'belief in life after death' is actually realized physically, millions of times over, as a structure in the nervous systems of individual men the world over."

While the idea of a belief structure parasitizing the brain may seem utterly dehumanizing, our ability to recognize when and how certain memes influence our actions ultimately gives us greater agency over our behavior. The modern drive for individuality is indeed problematized by the fact that, upon reflection, all of our ideas come second-hand. This is not to say that some memes are not more derivative and clichéd than others. Rather, originality becomes a matter of reorganizing traditional ideas into new patterns that hold up under scrutiny. Seen in this light, the development of human ideas can be understood as an evolving landscape of competing memes.

Eric Drexler writes in Engines of Creation, "At best, chain letters, spurious rumors, fashionable lunacies, and other mental parasites harm people by wasting their time. At worst, they implant deadly misconceptions. These meme systems exploit human ignorance and vulnerability. Spreading them is like having a cold and sneezing on a friend." Authoritarian governments are known to enforce the cultivation of memes that are harmful to the individual when they serve the interests of the state. In that sense, authoritarian memes prove exceedingly effective in monopolizing their hosts' mental efforts, shutting out any new, conflicting mode of thought. "Memes that seal the mind against new ideas protect themselves in a suspiciously self-serving way," Drexler writes. "While protecting valuable traditions from clumsy editing, they may also shield parasitic claptrap from the test of truth. In times of swift change they can make minds dangerously rigid." Obviously, as the only species endowed with a rational intellect, we have a certain responsibility to be mindful of the memes we spread and what ends they serve.

Monday, September 04, 2006

SENS

For those concerned with preserving life despite the many reasonable arguments to the contrary, Strategies for Engineering Negligible Senescence is a project spearheaded by Cambridge biomedical gerontologist Aubrey de Grey. SENS takes a goal-directed approach to eliminating aging, rather than a curiosity-driven approach to observing the body’s predetermined pattern of decline, malfunction, and collapse.

Aubrey de Grey defines SENS in contradistinction to traditional methods in geriatric treatment. Geriatrics attempts to stop damage from causing pathology, while biomedical gerontology would provide anti-aging medicine, rejuvenating the body rather than merely slowing down the aging process.

Aubrey de Grey, the face of SENS

SENS targets seven deadly causes of aging and proposes advanced treatments to counteract their effects. Here are the perceived problems and their proposed solutions:

1. Cell atrophy

Counteracting cell loss would require the addition of growth factors, and real progress in this area cannot be made without widespread stem cell research. The Geron Corporation developed techniques for extending telomeres in 1998 and showed that they could prevent senescence in cultured human cells, but an increased risk of cancer might be the trade-off of such methods.

2. Cellular mutations

Genetic mutations leading to cancer would need to be targeted. One proposed method of preventing cancer is whole-body interdiction of lengthening of telomeres (WILT), which would deny cancer cells the ability to keep lengthening their telomeres and so to divide indefinitely.

3. Mitochondrial mutations

Mitochondria are prone to undergoing mutations due to their highly oxidative environment. The loss of mitochondrial function is thought to be a major cause of progressive cellular deterioration. To correct the problem, genes existing in the mitochondria could be preserved by copying them onto the chromosomes in the nucleus.

4. Cellular senescence

Programmed apoptosis would involve eliminating senescent cells through the intervention of cell therapies or vaccines, making room for healthy cells.

5. Extracellular cross-links

Chemical cross-links form between extracellular proteins with age, making tissues stiffer and less elastic. Enzymes and small-molecule drugs might be developed to break links caused by glycation (sugar-bonding).

6. Extracellular junk

This would involve removing the buildup of toxic substances such as the amyloid plaque associated with Alzheimer’s disease. Junk found outside the cell might be removed by enhancing the immune system’s use of specialized cells called phagocytes, which engulf foreign bodies and thus fight infection. Microscopic surgical processes and small-molecule drugs capable of breaking up the beta-sheet structure of amyloid might also prove beneficial.

7. Intracellular junk

Removing junk from inside cells would require adding new enzymes to the cell’s natural digestive organelle, the lysosome. These enzymes could be taken from bacteria or molds.

To accelerate research in radical life extension, the Methuselah Mouse Prize is being offered to scientists who succeed in extending the lifespan of mice. The prize currently stands at $3.6 million.

Perhaps the main impediment standing in the way of SENS development is the entrenched position of death advocacy in our culture. Mistaking the necessity of death for an inherent human virtue, critics of SENS cling to any number of false justifications for the continuing existence of senescence.

"[T]he common wisdom is quite strong - even among friends and associates," writes SENS advocate Ray Kurzweil. "[T]he common wisdom regarding life cycle and the concept that life won’t be much different in the future than it is today still permeates people’s thinking. Thoughts and statements regarding life’s brevity and senescence are still quite influential. The deathist meme, that death gives meaning to life, is alive and well."

On the contrary, the struggle to eliminate senescence deserves our every effort. Even if SENS proves not to be up to the task, obviously we'll never know until we try.

Sunday, September 03, 2006

Existential Risk

With advanced destructive technologies becoming increasingly affordable to develop, error or deliberate misuse resulting in near-term human extinction has never before posed such an immediate threat. If you find discussions of this sort offensive, please read no further.

A threat that would annihilate intelligent life on Earth, or permanently and drastically curtail its potential, is what Oxford professor Nick Bostrom calls an existential risk. The build-up of nuclear armaments during the Cold War first demonstrated that human civilization possessed the means to annihilate itself. The fact that no exchange of nuclear weapons has occurred, thanks in large part to the extreme hazard of mutually assured destruction, is a positive sign that our species has sense enough not to bring about its own destruction. Yet the prevalence of terrorist activity in the early years of the 21st century already signals that it takes far less than the resources of an entire rogue state to cause horrendous casualties.

The Doomsday argument sums up the problem rather succinctly. If human civilization is to end as the result of an advanced weapon of mass destruction, the end would most likely come when the greatest number of people are alive. Because that final, most populous era contains the largest share of all the people who will ever live, a randomly chosen human, such as you, is most likely to find themselves in it. What, then, are the sources of existential risk that might concern us?
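In its usual quantitative form, the Doomsday argument treats your birth rank among all humans who will ever be born as a random draw, which lets you put an upper bound on the total. A minimal sketch in Python, assuming the self-sampling premise; the function name and the figure of roughly 60 billion humans born so far are illustrative assumptions, not figures from this post:

```python
def doomsday_upper_bound(birth_rank: float, confidence: float = 0.95) -> float:
    """Upper bound on the total number of humans who will ever be born.

    Self-sampling assumption: treat your birth rank n as a uniform random draw
    from 1..N, where N is the unknown total. Then n/N is roughly uniform on
    (0, 1], so with probability `confidence` it exceeds 1 - confidence,
    which rearranges to N < n / (1 - confidence).
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    return birth_rank / (1.0 - confidence)


# Hypothetical input: an assumed ~60 billion humans born so far.
bound = doomsday_upper_bound(60e9)
print(f"With 95% confidence, fewer than {bound:.2e} humans will ever be born.")
```

At 95% confidence the bound is simply twenty times your birth rank. The argument says nothing about how civilization ends; it only turns "you are probably not unusually early" into a number.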

At the top of the list, there’s the obvious risk of nuclear warfare. Michael Anissimov of The Lifeboat Foundation, a non-profit organization concerned with protecting against existential risk, recently published a list of ten targets for a nuclear weapon that would maximize global damage and chaos, adding some facts about the startling accessibility of uranium: “[U]ranium ore is more common than gold, mercury, silver, or tungsten, and is found in substantial quantities worldwide, including in southern Australia, Africa, and the Middle East. It is the 48th most abundant element in the earth’s crust. Pitchblende uranium (1% pure) is available on eBay for approximately $20/kg. The US Department of Energy has stockpiled 704,000 metric tons of uranium in the form of hexafluoride solids.”

In 1961, at the height of the Cold War, the Soviet Union detonated the Tsar Bomba, a 50-megaton nuclear weapon. Its mushroom cloud rose as high as 64 km (40 mi) above Novaya Zemlya, an archipelago in the Arctic Ocean.

Nick Bostrom notes that while a nuclear holocaust would not necessarily end the human species instantaneously, it would set back the progress of civilization in a way that would not easily be overcome.

“Even if some humans survive the short-term effects of a nuclear war, it could lead to the collapse of civilization. A human race living under stone-age conditions may or may not be more resilient to extinction than other animal species.”

Another risk worthy of our concern is that posed by a genetically engineered biological pathogen. Recently the genome of the 1918 Spanish flu virus responsible for the deaths of 50 million people was published online. In a guest editorial printed in the New York Times, Bill Joy and Ray Kurzweil argued that thoughtlessly circulating the instructions for dangerous pathogens was a "Recipe for Destruction". “The genome is essentially the design of a weapon of mass destruction. No responsible scientist would advocate publishing precise designs for an atomic bomb, and in... ways revealing the sequence for the flu virus is even more dangerous.”

Kurzweil and Joy disagree about how to approach the perils of accelerating technological progress. Joy promotes relinquishment, though he offers no concrete plan for how the slippery slope of scientific advancement could peaceably be brought to a halt. Kurzweil argues in The Singularity Is Near that relinquishing potentially dangerous technologies would require worldwide suppression by a global totalitarian regime. In his view, only the continued development of more advanced therapies, such as RNA interference-based viral protection, can counteract the hazards posed by newly introduced deadly pathogens.

Audio of Nick Bostrom's presentation on existential risk at the Singularity Summit at Stanford University

An existential risk lying further down the road is the misuse of nanotechnology, whether accidental or deliberate. A self-replicating “nanobot” would be a device made of nanoscale molecular components that can make copies of itself from materials in its surroundings. The Foresight Institute, whose mission is to ensure the beneficial implementation of nanotechnology, has drawn up guidelines for the safe use of nanotech assemblers in a laboratory setting, but these do not address intentional misuse in an uncontrolled environment, which could leave nanobots multiplying wildly out of control. Eric Drexler explained the threat posed by the exponential growth of nanoscale assemblers in Engines of Creation and labeled the risk “grey goo.”

"Thus the first replicator assembles a copy in one thousand seconds, the two replicators then build two more in the next thousand seconds, the four build another four, and the eight build another eight. At the end of ten hours, there are not thirty-six new replicators, but over 68 billion. In less than a day, they would weigh a ton; in less than two days, they would outweigh the Earth; in another four hours, they would exceed the mass of the Sun and all the planets combined - if the bottle of chemicals hadn't run dry long before."
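Drexler's arithmetic is easy to check. A minimal sketch in Python, assuming (as the quoted passage does) a fixed copying time of one thousand seconds and unlimited raw materials; the function names are hypothetical:

```python
DOUBLING_TIME_S = 1_000  # Drexler's figure: each replicator copies itself in 1,000 seconds


def exponential_count(seconds: int, doubling_time_s: int = DOUBLING_TIME_S) -> int:
    """Replicators in existence after `seconds`, starting from one, if every
    replicator builds a copy each period (the population doubles per full period)."""
    return 2 ** (seconds // doubling_time_s)


def linear_new_count(seconds: int, doubling_time_s: int = DOUBLING_TIME_S) -> int:
    """New replicators after `seconds` if only the original builds copies, one
    per period: the naive linear intuition Drexler contrasts with."""
    return seconds // doubling_time_s


ten_hours = 10 * 60 * 60  # 36,000 seconds
print(linear_new_count(ten_hours))   # 36
print(exponential_count(ten_hours))  # 68719476736, i.e. "over 68 billion"
```

Ten hours is thirty-six doubling periods, and 2 to the 36th power is about 68.7 billion. The model deliberately ignores the supply of raw materials, which is exactly the caveat Drexler adds at the end of the passage.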

As an antidote to grey goo, Drexler proposed the use of active shields, global immune systems that could counteract the unregulated proliferation of assemblers. Recently Ralph Merkle and Michael Vassar in conjunction with the Lifeboat Foundation proposed plans for a Nanoshield to protect against self-replicating nanobots.

An often overlooked source of existential risk is destructive artificial intelligence. An AI that was not programmed properly might simply fail to include human survival in its agenda. Technologists concerned with human-equivalent machine intelligence cite Vernor Vinge's 1993 essay The Coming Technological Singularity as foreseeing a possible scenario for the creation of a godlike superintelligence. Vinge's essay builds on a passage written in 1965 by the statistician I. J. Good:

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an "intelligence explosion," and the intelligence of man would be left far behind.”

One organization currently devoting its efforts to ensuring the safe development of AI is the Singularity Institute for Artificial Intelligence. The goal of its research team is to ensure that advances in artificial intelligence reflect the humane values of human civilization.

The positive side of accelerating technological change is the possibility that human civilization might overcome the constraints forced upon it by centuries of environmental selection pressures. By creating technologies that enhance intelligence and improve the potential for mutual cooperation, our species may well continue to elude self-destruction. While relinquishment is most likely impossible to implement safely and counter to the human desire to expand knowledge, the continued development of human society obviously ought to be directed by the ethical imperatives that safeguard our continued survival in the face of existential risks.

Friday, September 01, 2006

Neurodiversity

Asperger’s syndrome is a neurological condition named after the Austrian physician Hans Asperger. His child patients' fondness for demonstrating their detailed knowledge of a given topic made them resemble “little professors”; they tended to focus intently on a limited range of artistic or intellectual interests while having difficulty with social relations. The syndrome is listed as a pervasive developmental disorder in the DSM-IV, the Diagnostic and Statistical Manual of Mental Disorders, though it does not correlate with poverty, suicide, crime, or below-average intelligence.

Psychologist Bruno Bettelheim attributed autism to cold, unaffectionate parenting, blaming the disorder on distant and rejecting “refrigerator mothers.” Bettelheim went as far as to liken the condition of autistic children to his own experiences as a prisoner at the Dachau concentration camp in the late 1930s. Psychologists in the mid-20th century tended to classify Asperger’s syndrome as a special case of schizophrenia instead of mild autism.

As the long-held refrigerator mother explanation has gone out of fashion, the number of reported cases of autism has increased drastically. Autistic students are the fastest-growing population of special-needs students in the US, with reported cases rising by 900% between 1992 and 2001, according to the United States Department of Education. An increasing number of parents reject the idea that autism could have a purely genetic basis, arguing that such a steep increase in reported cases could only be explained by environmental factors. In nine out of ten cases, however, when one identical twin has the disorder, so does the other. Some have blamed vaccines as having a causal connection to autism, though no hard evidence exists to support the link.

Dr. Hans Asperger described his patients as little professors

Wired magazine reported in its December 2001 issue that the number of children diagnosed with Asperger’s had surged in Silicon Valley. “Over and over again, researchers have concluded that the DNA scripts for autism are probably passed down not only by relatives who are classically autistic, but by those who display only a few typically autistic behaviors.” There appears to be a subtle connection between the skills required of engineers and high-level autistic traits. Asperger’s patients tend to be drawn to organized systems, complex machines, and visual modes of thinking.

Asperger's patients also tend to engage in "stimming" behaviors, which are thought to regulate the arousal of the nervous system. Stimming is a jargon term for stereotypy, a repetitive body movement that self-stimulates one or more senses in a regulated manner. Common forms of stimming among people with autism include hand flapping, body spinning or rocking, lining up or spinning toys or other objects, echolalia, verbal perseveration, and repeating rote phrases. Stimming is believed by some to be a controlled way of releasing beta-endorphins into the body, which may explain why it can be useful for jogging the memory or directing focus.

Reported cases of autism have risen drastically in the past decade

Although many children with more severe forms of autistic disorder have intellectual disabilities, others with high-functioning autism may have a genius-like ability in a particular area, such as computing complicated math problems in their heads or memorizing dates or trivia such as baseball statistics. Indeed, those with Asperger’s syndrome tend to have average or above-average intellects. The fact that impairment and enhancement exist under the same rubric of "autism" makes it difficult to discuss the condition rationally. Here I want to focus on the Neurodiversity movement, which seeks to counteract claims that autism confers de facto inferiority.

"Maybe autism has not touched your life. Consider yourself fortunate. It's also probably just a matter of time."

—a dire warning from "Triple A"

The organization Athletes Against Autism, or "Triple A," has taken up the challenge of fighting the disorder. “When you get the diagnosis, you’re in shock,” said hockey star Olaf Kolzig, speaking in the second person about learning that his son had autism. “When people find out they have cancer they know where to go and what to do. It’s not the same thing, obviously, but this is such a baffling disorder, and there’s no blueprint for where to turn.” The lack of outward affection signaled to the Kolzigs that something was atypical about their son Carson, who at age ten is still largely non-verbal. His mother explains, “Olie would return from lengthy road trips only to find that Carson showed no reaction to his father’s presence.”

For someone whose life revolves around extreme forms of physical contact, the introversion Kolzig discovered in his son is understandably disappointing. However, to suggest that mild autism is analogous to cancer strikes at the heart of the neurodiversity awareness issue. One Aspie has fired back against such stereotypes with this YouTube video, Asperger Syndrome: A Positive Perspective. Notice how "Aspies" are providing their own counter-stereotype of those without autism as booze-swilling, sports-obsessed Neanderthals whose neurological condition is optimized for mating rituals and primitive tribalism. It may only be a matter of time before an Aspies Against Athleticism emerges to take issue with Kolzig's attempts to body check autism back into conformity.

Professor Simon Baron-Cohen of the Autism Research Centre at Cambridge University noted that Isaac Newton and Albert Einstein displayed personality traits characteristic of Asperger’s syndrome. As a child, Einstein was a loner and tended to repeat sentences obsessively. Newton had few friends and could become so obsessed with his work that he frequently forgot to eat. Clearly there are drawbacks to having such a neurological condition, but if autism is sometimes capable of conferring genius-level innovation and intellect for one’s pains, then we might pause before labeling Asperger's a disorder. Consider the case of Gilles Trehin, an autistic artist who designs entire cities. Is there really no place in modern society for his unique talents?

Gilles Trehin beside a sketch of Urville, the imaginary city he created

How about letting it be up to the individual to decide whether or not his condition constitutes a disorder? Clearly many with Asperger's syndrome are comfortable with their minds the way they are and are using the net as a forum to make their contentment publicly known. In certain cases, autism clearly provides neurological benefits, which need not be pleasant to be meaningful. Treatment for severe cases of autism ought to be optimized, but without alienating the valid contributions of autistic human beings to our multifaceted civilization.

As a bulwark against the kind of withdrawal seen in the hikikomori phenomenon in Japan, the autistic community should take it upon itself to develop digital communications as a refuge from social isolation, following the lead of groups like Aspies for Freedom. Aspies would do well to realize that failing to take care of their own will only provide unsympathetic neurotypicals with ammunition for continuing to claim their inherent superiority. On the broad spectrum of possible brain configurations that modern civilization can handle, obviously there is ample room for the meaningful participation of autistic minds.